Parallel Computing Models

1. Shared Memory Model (without threads)

2. Threads Model

3. Distributed Memory / Message Passing Model

4. Data Parallel Model

5. Hybrid Model

6. SPMD and MPMD

Shared Memory Model (without threads):

In this programming model, processes/tasks share a common address space, which they read from and write to asynchronously.

Various mechanisms, such as locks and semaphores, are used to control access to the shared memory, resolve contention, and prevent race conditions and deadlocks.
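As a minimal sketch of lock-controlled access to shared memory, the following uses Python's standard `multiprocessing` module. It assumes a POSIX system where the "fork" start method is available; the names `add_many` and `run_shared_counter` are hypothetical and chosen only for illustration.

```python
import multiprocessing as mp

# Assumption: a POSIX system where the "fork" start method is available.
ctx = mp.get_context("fork")


def add_many(counter, n):
    """Increment the shared counter n times, taking the lock for each update."""
    for _ in range(n):
        with counter.get_lock():   # serialize the read-modify-write
            counter.value += 1


def run_shared_counter(num_procs=4, n=1000):
    """Start num_procs processes that all update one shared integer."""
    counter = ctx.Value("i", 0)    # an int placed in memory shared by all processes
    procs = [ctx.Process(target=add_many, args=(counter, n))
             for _ in range(num_procs)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter.value


if __name__ == "__main__":
    print(run_shared_counter())    # prints 4000
```

Without the lock, two processes could read the same old value and one increment would be lost, which is the kind of race condition the lock is there to prevent.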

Disadvantages in terms of performance:

- Data locality becomes harder to understand and manage. Keeping data local to the process that works on it saves the memory accesses, cache refreshes, and bus traffic that occur when multiple processes use the same data.

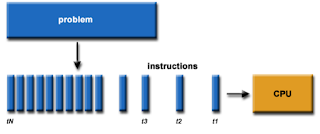

Threads Model:

This programming model is a type of shared memory programming.

In this model of parallel programming, a single "heavyweight" process can have multiple "lightweight", concurrent execution paths.

For example,

- The main program a.out is scheduled to run by the native operating system. a.out loads and acquires all of the necessary system and user resources to run. This is the "heavyweight" process.

- a.out performs some serial work and then creates a number of tasks (threads) that can be scheduled and run by the operating system concurrently.

- Each thread has local data but also shares all of the resources of a.out. This saves the overhead associated with replicating a program's resources for each thread ("lightweight"). Each thread also benefits from a global memory view because it shares the memory space of a.out.

- A thread's work may best be described as a subroutine within the main program. Any thread can execute any subroutine at the same time as other threads.

- Threads communicate with each other through global memory (updating address locations). This requires synchronization constructs to ensure that no two threads update the same global address at the same time.

- Threads can come and go, but a.out remains present to provide the necessary shared resources until the application has completed.
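The steps above can be sketched with Python's standard `threading` module. This is an illustration only, not Pthreads or OpenMP; the function names `run_threads` and `worker` are hypothetical. The main program plays the role of a.out: it does serial setup, creates threads that each keep local data, and waits for them to finish.

```python
import threading


def run_threads(num_threads=4, n=10_000):
    """Sum the integers 0..n-1 using several threads of one process."""
    total = 0                    # shared ("global") data, visible to every thread
    lock = threading.Lock()      # synchronization construct guarding `total`

    def worker(thread_id):
        nonlocal total
        local_sum = 0            # local data, private to this thread
        for i in range(thread_id, n, num_threads):
            local_sum += i
        with lock:               # only one thread at a time updates the shared value
            total += local_sum

    threads = [threading.Thread(target=worker, args=(t,))
               for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                 # the main program outlives all of its threads
    return total


if __name__ == "__main__":
    print(run_threads())         # prints 49995000
```

Each thread accumulates into its own local variable and takes the lock only once, which keeps contention on the shared value low.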

Implementations:

The programmer is responsible for determining the parallelism (although compilers can sometimes help).

Unrelated standardization efforts have resulted in two very different implementations of threads: POSIX Threads and OpenMP.

- POSIX Threads

  - Specified by the IEEE POSIX 1003.1c standard (1995). C Language only.

  - Part of Unix/Linux operating systems

  - Library based

  - Commonly referred to as Pthreads.

  - Very explicit parallelism; requires significant programmer attention to detail.

- OpenMP

  - Industry standard jointly defined and endorsed by a group of major computer hardware and software vendors, organizations, and individuals.

  - Compiler directive based

  - Portable / multi-platform, including Unix and Windows platforms

  - Available in C/C++ and Fortran implementations

  - Can be very easy and simple to use - provides for "incremental parallelism". Can begin with the serial code.

- Other threaded implementations exist, such as Microsoft's

Distributed Memory / Message Passing Model:

This model demonstrates the following characteristics:

- A set of tasks that use their own local memory during computation. Multiple tasks can reside on the same physical machine and/or across an arbitrary number of machines.

- Tasks exchange data through communications by sending and receiving messages.

- Data transfer usually requires cooperative operations to be performed by each process. For example, a send operation must have a matching receive operation.
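The cooperative send/receive pairing can be sketched as follows. This is not MPI: it uses a pipe from Python's standard `multiprocessing` module as a stand-in for a message passing library, and it assumes a POSIX system where the "fork" start method is available. The names `worker` and `run_message_passing` are hypothetical.

```python
import multiprocessing as mp

# Assumption: a POSIX system where the "fork" start method is available.
ctx = mp.get_context("fork")


def worker(conn):
    """A task with its own local memory; it only sees what it is sent."""
    data = conn.recv()           # matching receive for the parent's send
    conn.send(sum(data))         # send the result back as a message
    conn.close()


def run_message_passing(values):
    """Send a list to a separate process and receive its sum."""
    parent_end, child_end = ctx.Pipe()
    proc = ctx.Process(target=worker, args=(child_end,))
    proc.start()
    parent_end.send(values)      # send ...
    result = parent_end.recv()   # ... and the matching receive for the reply
    proc.join()
    return result


if __name__ == "__main__":
    print(run_message_passing([1, 2, 3, 4]))   # prints 10
```

Every `send` here has exactly one matching `recv` on the other end of the pipe; if either side skipped its half, the other would block.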

Implementations:

From a programming perspective, message passing implementations usually comprise a library of subroutines. Calls to these subroutines are embedded in the source code. The programmer is responsible for determining all parallelism.

The first Message Passing Interface specification (MPI-1) was released in 1994, MPI-2 in 1997, and MPI-3 in 2012. All MPI specifications are available on the web at http://www.mpi-forum.org/docs/.

Data Parallel Model:

The data parallel model demonstrates the following characteristics:

- Address space is treated globally

- Most of the parallel work focuses on performing operations on a data set. The data set is typically organized into a common structure, such as an array or cube.

- A set of tasks works collectively on the same data structure; however, each task works on a different partition of it.

- Tasks perform the same operation on their partition of work, for example, "add 4 to every array element".
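The "add 4 to every array element" example can be sketched as below, assuming a simple block partitioning and a thread pool from Python's standard library. The helper names `partition`, `add_four`, and `data_parallel_add` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, parts):
    """Split data into at most `parts` contiguous blocks of near-equal size."""
    size = max(1, (len(data) + parts - 1) // parts)   # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def add_four(chunk):
    """The single operation every task applies to its own partition."""
    return [x + 4 for x in chunk]


def data_parallel_add(data, num_tasks=4):
    """Apply add_four to each partition and reassemble the result in order."""
    chunks = partition(data, num_tasks)
    with ThreadPoolExecutor(max_workers=num_tasks) as pool:
        results = list(pool.map(add_four, chunks))    # map preserves chunk order
    return [x for chunk in results for x in chunk]


if __name__ == "__main__":
    print(data_parallel_add([1, 2, 3, 4, 5], num_tasks=2))   # prints [5, 6, 7, 8, 9]
```

The tasks never touch each other's partitions, so no locking is needed; the only coordination is reassembling the pieces at the end.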

Hybrid Model:

- A hybrid model combines more than one of the previously described programming models.

- Currently, a common example of a hybrid model is the combination of the message passing model (MPI) with the threads model (OpenMP).

  - Threads perform computationally intensive kernels using local, on-node data

  - Communications between processes on different nodes occur over the network using MPI

- This hybrid model lends itself well to the most popular (currently) hardware environment of clustered multi/many-core machines.

- Another similar and increasingly popular example of a hybrid model is using MPI with CPU-GPU (Graphics Processing Unit) programming.

  - MPI tasks run on CPUs using local memory and communicating with each other over a network.

  - Computationally intensive kernels are off-loaded to GPUs on-node.

  - Data exchange between node-local memory and GPUs uses CUDA (or something equivalent).

- Other hybrid models are common:

  - MPI with Pthreads

  - MPI with non-GPU accelerators

  - ...
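The structure of the message passing plus threads combination can be sketched in miniature. This is neither MPI nor OpenMP: separate processes stand in for nodes, pipes stand in for the network, and a thread pool inside each process works on node-local data. It assumes a POSIX system where the "fork" start method is available; `node_main`, `hybrid_sum`, and `split` are hypothetical names.

```python
import multiprocessing as mp
from concurrent.futures import ThreadPoolExecutor

# Assumption: a POSIX system where the "fork" start method is available.
ctx = mp.get_context("fork")


def split(data, parts):
    """Split data into at most `parts` contiguous blocks."""
    step = max(1, (len(data) + parts - 1) // parts)
    return [data[i:i + step] for i in range(0, len(data), step)]


def node_main(conn, threads_per_node):
    """One 'node': receive a block, sum it with local threads, send the result."""
    block = conn.recv()                                   # message passing in
    with ThreadPoolExecutor(max_workers=threads_per_node) as pool:
        partials = list(pool.map(sum, split(block, threads_per_node)))
    conn.send(sum(partials))                              # message passing out
    conn.close()


def hybrid_sum(data, num_nodes=2, threads_per_node=2):
    """Distribute blocks to node processes and combine their partial sums."""
    links = []
    for block in split(data, num_nodes):
        parent_end, child_end = ctx.Pipe()
        proc = ctx.Process(target=node_main, args=(child_end, threads_per_node))
        proc.start()
        parent_end.send(block)
        links.append((proc, parent_end))
    total = 0
    for proc, parent_end in links:
        total += parent_end.recv()
        proc.join()
    return total


if __name__ == "__main__":
    print(hybrid_sum(list(range(100))))   # prints 4950
```

The two levels are visible in the code: threads share a block inside `node_main`, while the only data crossing process boundaries travels through explicit `send` and `recv` calls.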

SPMD and MPMD:

Single Program Multiple Data (SPMD):

- SPMD is actually a "high level" programming model that can be built upon any combination of the previously mentioned parallel programming models.

- SINGLE PROGRAM: All tasks execute their copy of the same program simultaneously. This program can be threads, message passing, data parallel or hybrid.

- MULTIPLE DATA: All tasks may use different data

- SPMD programs usually have the necessary logic programmed into them to allow different tasks to branch or conditionally execute only those parts of the program they are designed to execute. That is, tasks do not necessarily have to execute the entire program - perhaps only a portion of it.

- The SPMD model, using message passing or hybrid programming, is probably the most commonly used parallel programming model for multi-node clusters.
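A minimal SPMD sketch, using threads as the tasks for simplicity: every task runs the same function, works on a different slice of the data, and branches on its rank so that only one task performs the final combination. The names `program` and `run_spmd`, and the rank/size convention, are assumptions borrowed from common message passing usage rather than from the text above.

```python
import threading


def program(rank, size, data, partials, barrier, output):
    """The single program that every task executes."""
    # MULTIPLE DATA: each rank works on its own strided slice of the input.
    partials[rank] = sum(data[rank::size])
    barrier.wait()               # past this point every partial sum is written
    if rank == 0:                # only rank 0 executes this part of the program
        output.append(sum(partials))


def run_spmd(data, size=4):
    """Launch `size` copies of the same program and return rank 0's result."""
    partials = [0] * size
    output = []
    barrier = threading.Barrier(size)
    tasks = [threading.Thread(target=program,
                              args=(r, size, data, partials, barrier, output))
             for r in range(size)]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()
    return output[0]


if __name__ == "__main__":
    print(run_spmd(list(range(10))))   # prints 45
```

The `if rank == 0` branch is the conditional logic described above: all tasks share the program text, but not every task executes every part of it.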

Multiple Program Multiple Data (MPMD):

- Like SPMD, MPMD is actually a "high level" programming model that can be built upon any combination of the previously mentioned parallel programming models.

- MULTIPLE PROGRAM: Tasks may execute different programs simultaneously. The programs can be threads, message passing, data parallel or hybrid.

- MULTIPLE DATA: All tasks may use different data

- MPMD applications are not as common as SPMD applications, but may be better suited for certain types of problems, particularly those that lend themselves better to functional decomposition than domain decomposition.
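Functional decomposition can be sketched as a small pipeline in which each task runs a different program. Threads and queues from Python's standard library are used here purely for illustration; the stage names `reader`, `squarer`, and `summer` are hypothetical, and the sketch assumes the input never contains `None`, which is used as the end-of-stream marker.

```python
import queue
import threading

DONE = None   # end-of-stream marker; assumes None never appears in the input


def reader(values, out_q):
    """Program 1: feed the raw values into the pipeline."""
    for v in values:
        out_q.put(v)
    out_q.put(DONE)


def squarer(in_q, out_q):
    """Program 2: transform each value as it arrives."""
    while (v := in_q.get()) is not DONE:
        out_q.put(v * v)
    out_q.put(DONE)


def summer(in_q, result):
    """Program 3: reduce the transformed stream to one number."""
    total = 0
    while (v := in_q.get()) is not DONE:
        total += v
    result.append(total)


def run_mpmd(values):
    """Run the three different programs concurrently and return the sum of squares."""
    q1, q2, result = queue.Queue(), queue.Queue(), []
    stages = [
        threading.Thread(target=reader, args=(values, q1)),
        threading.Thread(target=squarer, args=(q1, q2)),
        threading.Thread(target=summer, args=(q2, result)),
    ]
    for s in stages:
        s.start()
    for s in stages:
        s.join()
    return result[0]


if __name__ == "__main__":
    print(run_mpmd([1, 2, 3]))   # prints 14
```

Here the work is divided by function (read, transform, reduce) rather than by splitting one data set among identical tasks, which is the distinction between functional and domain decomposition.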
